Passing the Turing Test

by Andrew Zhou, Psychology

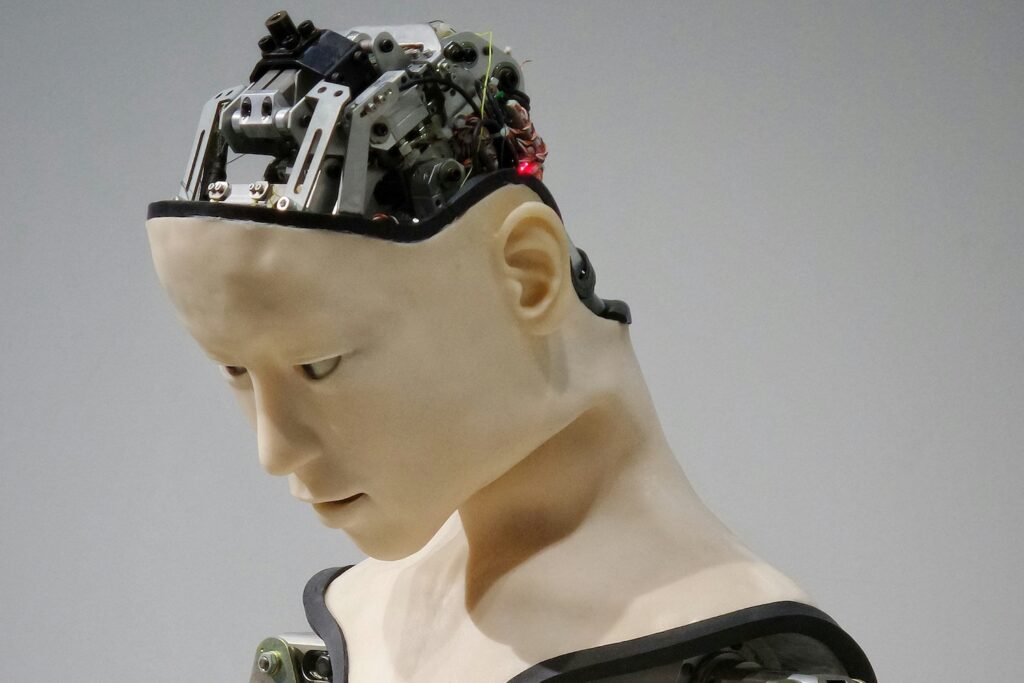

This essay explores the argument that passing the Turing test constitutes sufficient evidence that machines can think. The test assesses a machine’s ability to exhibit intelligent behavior that parallels human behavior. If the machine’s output is indiscernible from human behavior, then Turing asserts that the machine is thinking, just like a human. For all intents and purposes, the machine demonstrates behavioral equivalence to humans. Therefore, if humans think, then these machines can also think.

Because consciousness cannot be verified directly, any assessment of thinking has to rest on measures that are objective and consistent. Proponents of the Turing test reject the notion that internal properties such as consciousness, subjectivity, and physical composition are defining factors in the ability to think. Instead, external behavior is viewed as the most reliable metric for gauging intelligence. On the other side, critics believe that the Turing test merely captures the ability to imitate. They argue that the machine is simply processing syntax without genuine understanding or intentionality. However, this essay contends that such opposing claims contain inconsistencies and that the Turing test arises from necessity and functionality. Intelligence should be judged by observed behavior rather than by unrealistic metrics like direct access to mental states. If we accept behavioral evidence as sufficient for human intelligence, it should be equally sufficient for machines. In short, the Turing test is a compelling and empirical benchmark that clarifies the underlying boundaries of artificial intelligence.

Turing test, functionalism, behavior, intelligence

The lack of objective measures for what ‘thinking’ entails is a significant limitation in our understanding of intelligence. Especially with the rising prevalence of technology, the debate around what constitutes intelligence has reached a crossroads. It is unclear which path leads to the truth about intelligence: is intelligence bound to the human mind, or can it exist in various forms?

To answer this question from a philosophical perspective, an explicit definition of intelligence is a prerequisite. However, defining intelligence is often indeterminate and problematic, making it all the more difficult to determine whether machines possess the ability to think. Many of these efforts, both past and present, trace back to the renowned computer scientist Alan Turing. He devised a test that sought to demonstrate whether computers could ‘think.’ This famed Turing test is a modified version of the imitation game. That is, the computer is tasked with imitating human behavior so well that a human judge cannot tell the difference. To pass the test, the computer must deceive the judge into believing that they are interacting with another human and not a computer. The Turing test is considered highly compelling because it bypasses the ambiguous definitions of ‘thinking’ and instead focuses on the observable behavior of the computer. If we believe that humans can think, then we must also believe that a computer that indistinguishably imitates intelligent human behavior is thinking as well.

The Turing test is unique in that it explores an alternative approach to the question of artificial intelligence. In his paper “Computing Machinery and Intelligence,” Turing admits that the standard conception of the question “Can machines think?” is difficult to answer. Turing instead frames the question in a new light: Can machines imitate human behavior to a degree that is indiscernible to other real humans? This way of asking the question is far more meaningful and pragmatic because we can actually assess the outcome. As a reminder, passing the Turing test requires the machine to imitate human behavior convincingly enough to deceive the human evaluator. If the evaluator is unable to tell the machine apart from a human, then the machine has at the very least behaved with human-like intelligence. On the assumption that human behavior requires human intelligence, a machine that fools the evaluator has displayed its capacity for such intelligence. The behavioral differences between the machine and the human were invisible to the evaluator, hence the failure to identify the imposter. In this sense, the Turing test approaches the broader discussion of intelligence from a functionalist perspective. The functionalist lens on intelligence prioritizes the function of a mental state, that is, its role in a larger system, rather than its internal composition. Similarly, the Turing test does not capture qualia, subjective experiences, or any other related inner processes; rather, it offers an objective metric of intelligence through external, observable evidence. Simply put, it uses behavior as evidence. Disregarding internal composition, the machine can be functionally equivalent to humans by behaving indistinguishably from them. According to functionalism, this type of machine behavior is no different from human intelligence. It is this notion that supplies the central claim: functionally, passing the Turing test is sufficient evidence that computers can think.

To further illustrate the implications of passing the Turing test, imagine a machine whose output is indistinguishable from that of a human. Because the output matches human behavior, we would interpret that machine’s behavior as human ‘thinking.’ It is reasonable to assume that to behave like a human, one must think like a human. This behavioral equivalence indicates the capacity for thought because, relating back to functionalism, mental states have a role in producing observable, intelligent behavior in humans. Since we cannot directly measure mental states, behavior is the only observable evidence available for detecting whether something is thinking. The behavior required to pass the Turing test must be a functional result of the computer’s ‘thinking.’ The computer’s ‘thinking’ causes the computer to behave like a human, just as human thoughts dictate human behavior. Therefore, whatever process causes the computer’s behavior is functionally equivalent to ‘thinking’ in humans.

Rather than relying on ambiguous definitions to attribute intelligence to things, the Turing test provides an objective benchmark with a definite conclusion: passing the Turing test means the machine can think, at least functionally. Here is the formal layout of how the argument arrives at this conclusion:

(Premise 1) Anything that passes the Turing test can think;

(Premise 2) Machines can pass the Turing test;

(Conclusion) Machines can think.
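
The structure of this argument can be made explicit in predicate logic. The following is only a sketch under one reading of the premises: the predicate letters M (is a machine), P (passes the Turing test), and T (can think) are labels introduced here for illustration, and Premise 2 is read existentially, as the claim that at least one machine can pass the test.

```latex
% Requires the amsmath package.
% M(x): x is a machine; P(x): x passes the Turing test; T(x): x can think.
% These predicate letters are illustrative assumptions, not the essay's notation.
\begin{align*}
\text{(Premise 1)} \quad & \forall x\,\bigl(P(x) \rightarrow T(x)\bigr) \\
\text{(Premise 2)} \quad & \exists x\,\bigl(M(x) \land P(x)\bigr) \\
\text{(Conclusion)} \quad & \exists x\,\bigl(M(x) \land T(x)\bigr)
\end{align*}
```

On this reading the inference is valid: take any machine a that passes the test, apply Premise 1 to a to obtain T(a), and conclude that some machine can think. The weight of the argument therefore falls on whether the premises are true, Premise 1 in particular.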

Premise 1 has already been supported by the claim that passing the Turing test requires functional equivalence to human thinking. As mentioned previously, behavior indistinguishable from a human’s is treated as evidence of thinking. Looking at the structure of this argument, we can see how the conclusion follows logically from the premises. Importantly, the notion of ‘thinking’ relies upon the widely shared belief in human intelligence; the conventional view holds that humans are intelligent and can ‘think.’ If we intuitively presume that other humans are capable of thought, we must also adopt the same attitude towards machines whose behavior is indistinguishable from that of humans. Because we accept that humans are ‘thinking,’ machines that act like humans must be ‘thinking’ as well. At the end of the day, we have no other means to judge the process of ‘thinking’ besides external behavior, so functional equivalence in behavior will have to suffice. In conclusion, behavioral equivalence is sufficient evidence: machines can ‘think’ because they can pass the Turing test, a feat that requires ‘thinking.’

However, the Turing test is not without its critics. The primary criticism is the argument from consciousness. This objection rejects the premise that anything that passes the Turing test can think. Essentially, it asserts that a machine passing the Turing test is not actually thinking but rather just following a program. The machine has no consciousness, feeling, or understanding of its actions; it is indifferently following its code.

To provide an example of this objection, philosopher John Searle designed the Chinese Room thought experiment, which illustrates the argument from consciousness. In this scenario, an English-speaking person who does not know Chinese is placed in a room with instructions, written in English, for manipulating Chinese symbols. Despite not knowing any Chinese, the person can produce responses in Chinese that lead native Chinese speakers outside the room to believe the person is fluent in the language. In his article “Minds, brains, and programs,” Searle uses this thought experiment to discuss the difference between true understanding and symbol manipulation. In this case, symbol manipulation refers to handling the symbols of a language according to formal rules, without necessarily understanding their meaning or semantics. The person inside the room does not understand the language yet can convincingly feign comprehension by manipulating symbols as the instructions direct. The scenario carries over to the Turing test: a machine can deceive human judges, but it does not genuinely understand its own behavior (Searle, 1980). Searle argues that while a real Chinese speaker would understand the semantics of the language, the person inside the room can only process and manipulate symbols. Proponents of the consciousness objection would insist that a real human is aware of their behavior, whereas a machine follows its code without such awareness. The objection rests on the idea that computers lack genuine understanding and that thinking in humans goes beyond mere symbol manipulation. On this view, deceiving a judge does not mean that the computer understands its behavior; in sum, obeying code is not ‘thinking.’

While the argument from consciousness is a well-established challenge to the Turing test, it also has several weaknesses. Some could ask, “So what? So what if machines do not have consciousness?” The main rebuttal to the argument from consciousness involves the private nature of thoughts. Turing himself points out that we have no way of knowing that other people have consciousness (Turing, 1950). Our inability to directly access other people’s internal states is a crucial restriction to consider. After all, when we assess the thoughts of others, we can only evaluate their behavior and actions. The only evidence of their thoughts is what we infer from their behavior. In the Turing test, we are left with indistinguishable behavioral evidence of thinking from both machine and human. Thus, it is unreasonable to rule out machines simply because they are machines. To claim that machines are ineligible for ‘thinking’ due to internal composition is inconsistent, as we do not even use that standard for humans. Consciousness, human or machine, is something we cannot verify for certain. Requiring consciousness as evidence of thought would be unreasonable because then we could not even attribute intelligence to other humans. We would be contradicting our very conception of human intelligence. External behavior is the sole metric available to us, and the Turing test leverages it. To that end, passing the Turing test aligns with how we evaluate thinking in humans. If we believe other humans are thinking because of their intelligent behavior, then it is justified to believe the same of machines. That is to say, machines that behave intelligently are also thinking.

Searle’s critique does not negate the functionalist perspective. Although he presupposes that thought requires consciousness, it is the behavior produced by the system that should carry weight, not the understanding that comes from consciousness. The flaw in his argument is the imposition of unrealistic standards for artificial cognition that are not applied consistently to human cognition. We do not dismiss ‘thinking’ in other humans just because we cannot access their subjective experience. We cannot literally experience other people’s minds. Rather, we simply accept their behavior as evidence for thought. Objections based on consciousness fail because, in practice, the ability to think can only be evaluated by observable behavior. Since we cannot directly examine consciousness, it cannot serve as a criterion in our consideration of ‘thinking.’ In reality, behavioral equivalence shows that the machine meets the same standards we apply to thinking in humans. Until we develop technology that can transfer conscious experience between minds, the Turing test will remain the most practical method to assess thought. It all comes down to the line drawn between Turing and Searle: observable behavior is tangible, and consciousness is not. We cannot objectively depend on something that is immeasurable. With that said, passing the Turing test establishes sufficient evidence that a machine can think.

To fully appreciate the original Turing test, let’s imagine a scenario in which machines engage in other human activities. If the machine is playing chess or solving riddles, why shouldn’t we consider that as thinking? These activities require ‘thinking’ as conceived in humans, and in computers, the equivalent states inside the machine fulfill the same role as human cognition. The machine receives and processes information and produces a response indistinguishable from a human’s. In fact, the computer may even perform better than us. For all practical purposes, the machine is thinking. It is engaging in behavior that satisfies all our functional criteria for thought in humans. If a human replaced the computer in the aforementioned activities, we would certainly regard their behavior as a sign of thinking. To eliminate double standards, the best way to maintain consistency is to judge by behavioral equivalence. In our evaluations, we search for intelligent behavior, and such scrutiny should apply to both machines and humans. Since we intuitively accept that humans can think based on their behavior, despite having no direct access to their thoughts, it is logical for us to take the same position towards machines. Intelligence should not be determined on the basis of living cells or computer parts but rather by a test that measures the lone benchmark we can rely upon. It is observable behavior, not physical makeup, that functionally defines thought. It is the framework of functionalism and behavior that serves as our guide. Without it, we have no other footing, no groundwork, no signpost. Without the Turing test, we would be navigating blind.

References

Searle, J. R. (1980). Minds, brains, and programs. Behavioral and Brain Sciences, 3(3), 417–424. https://doi.org/10.1017/S0140525X00005756

Turing, A. M. (1950). Computing machinery and intelligence. Mind, 59(236), 433–460. https://doi.org/10.1093/mind/LIX.236.433

Citation Style: APA7